Introduction

Business owners and webmasters who are new to digital marketing often assume that the term search engine optimization (SEO) applies only to content creation. While it’s true that Google and other search engines take keywords and content structure into account, these on-page factors are only part of what determines rank. Technical SEO plays a major role, often a bigger one than site owners expect, in determining how easily potential customers or clients can find a company’s site.

Technical SEO refers to all the SEO practices that happen behind the scenes, from building sitemaps and writing robots.txt files to structuring the website itself. While the average business owner has little idea of how these factors influence a site’s rankings, SEO firms are acutely aware of the important role they play. Most clients won’t be able to handle their sites’ technical SEO requirements themselves, but they can still benefit from learning the basics of how the process works.

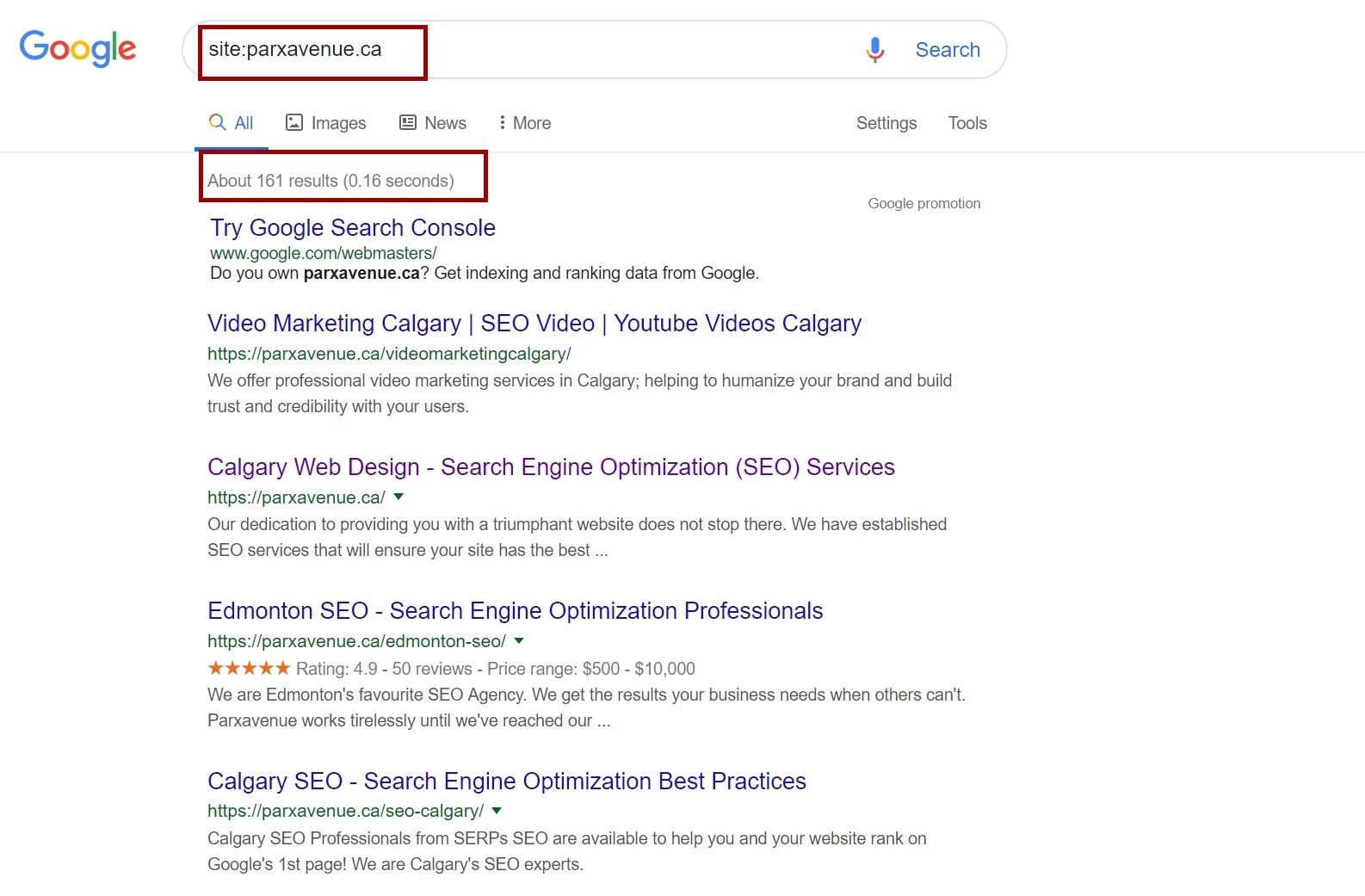

Site Indexing

Site owners need to keep track of how many of their pages are being indexed by search engines. Calgary SEO companies do this with SEO crawlers and site audit tools, which show how their clients’ pages are performing.

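They’ll check the indexing for several search engines. The number of indexed pages should roughly match the total number of pages on the website, minus the pages that are deliberately excluded via robots.txt, noindex meta tags, or X-Robots-Tag HTTP headers. (Strictly speaking, robots.txt blocks crawling rather than indexing, so a noindex tag or header is the reliable way to keep a page out of the index.)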
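One way to see which pages deliberately opt out of indexing is to inspect each page’s HTML and response headers. The Python sketch below is a simplified illustration, not a full audit tool: it assumes the page’s HTML and headers have already been downloaded, and it only looks for `noindex` (or `none`) in a `robots` or `googlebot` meta tag or in an `X-Robots-Tag` header. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.extend(d.strip().lower() for d in content.split(","))


def is_indexable(html, headers):
    """Return False if the page opts out of indexing via a robots meta tag or X-Robots-Tag header.

    Simplification: header values scoped to one crawler (e.g. "googlebot: noindex")
    are not recognized.
    """
    # HTTP header names are case-insensitive, so normalize them before the lookup.
    header_value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = parser.directives + [d.strip().lower() for d in header_value.split(",")]
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" not in directives and "none" not in directives


print(is_indexable('<meta name="robots" content="noindex, follow">', {}))  # False
print(is_indexable("<title>Services</title>", {"X-Robots-Tag": "noindex"}))  # False
print(is_indexable("<title>Services</title>", {}))  # True
```

Counting the pages that pass this check gives a rough target to compare against the indexed-page numbers the search engines report.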

If only a small proportion of the site’s pages are being indexed by one or more search engines, it’s a sign of trouble. The first step consultants will take is to review disallowed pages and ensure that all the site’s important resources are crawlable.
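Python’s standard library includes a robots.txt parser, `urllib.robotparser`, which makes it easy to sketch this review. The example assumes a made-up robots.txt and a hypothetical list of important URLs. Note that this parser follows the original robots.txt rules: it doesn’t understand Google’s `*` and `$` wildcard extensions, and it applies the first matching rule rather than Google’s most-specific-rule precedence, so treat it as a rough first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; a live check would call parser.set_url(...) and parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /services/
"""

# Hypothetical pages the site owner considers essential.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/services/technical-seo",
    "https://example.com/blog/crawl-budget",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots.txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
# ['https://example.com/services/technical-seo']
```

Here an overly broad `Disallow: /services/` rule is hiding a key service page, which is exactly the kind of mistake this review is meant to catch.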

Optimizing Crawl Budget

Checking crawlability isn’t just a matter of looking through robots.txt, although that’s a good start. For a page to be indexed properly, all of its resources must be crawlable, not just its HTML content. These include CSS and JavaScript files.

If a page’s JavaScript is blocked, Google can’t render the page, so any content generated by that JavaScript may never be indexed. Blocked CSS can also stop Google from seeing the page the way visitors do, for example when judging whether it works on mobile devices. Sites that rely heavily on JavaScript need to be evaluated with a crawler that can render it, which can be a challenge for those who aren’t in the know. A Calgary SEO specialist will be able to crawl and render JavaScript appropriately.
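A quick way to spot blocked CSS and JavaScript is to list the stylesheets and scripts a page references and test each one against robots.txt. This Python sketch assumes the page HTML and robots.txt text are already in hand; the sample page, paths, and CDN hostname are invented for illustration. It only checks files on the page’s own host, because third-party files are governed by their own robots.txt, and it does not render JavaScript, which still requires a rendering crawler.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of external scripts and stylesheets on a page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "script" and attrs.get("src"):
            self.resources.append(urljoin(self.page_url, attrs["src"]))
        elif tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.resources.append(urljoin(self.page_url, attrs["href"]))


def blocked_resources(html, page_url, robots_txt, user_agent="Googlebot"):
    """Return same-host CSS/JS URLs that the site's robots.txt disallows."""
    collector = ResourceCollector(page_url)
    collector.feed(html)
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    host = urlsplit(page_url).netloc
    return [
        url
        for url in collector.resources
        # Third-party files fall under their own host's robots.txt, so skip them.
        if urlsplit(url).netloc == host and not robots.can_fetch(user_agent, url)
    ]


PAGE_HTML = """
<link rel="stylesheet" href="/assets/site.css">
<script src="/wp-includes/js/app.js"></script>
<script src="https://cdn.example.net/library.js"></script>
"""
ROBOTS_TXT = "User-agent: *\nDisallow: /wp-includes/\n"

print(blocked_resources(PAGE_HTML, "https://example.com/services/", ROBOTS_TXT))
# ['https://example.com/wp-includes/js/app.js']
```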

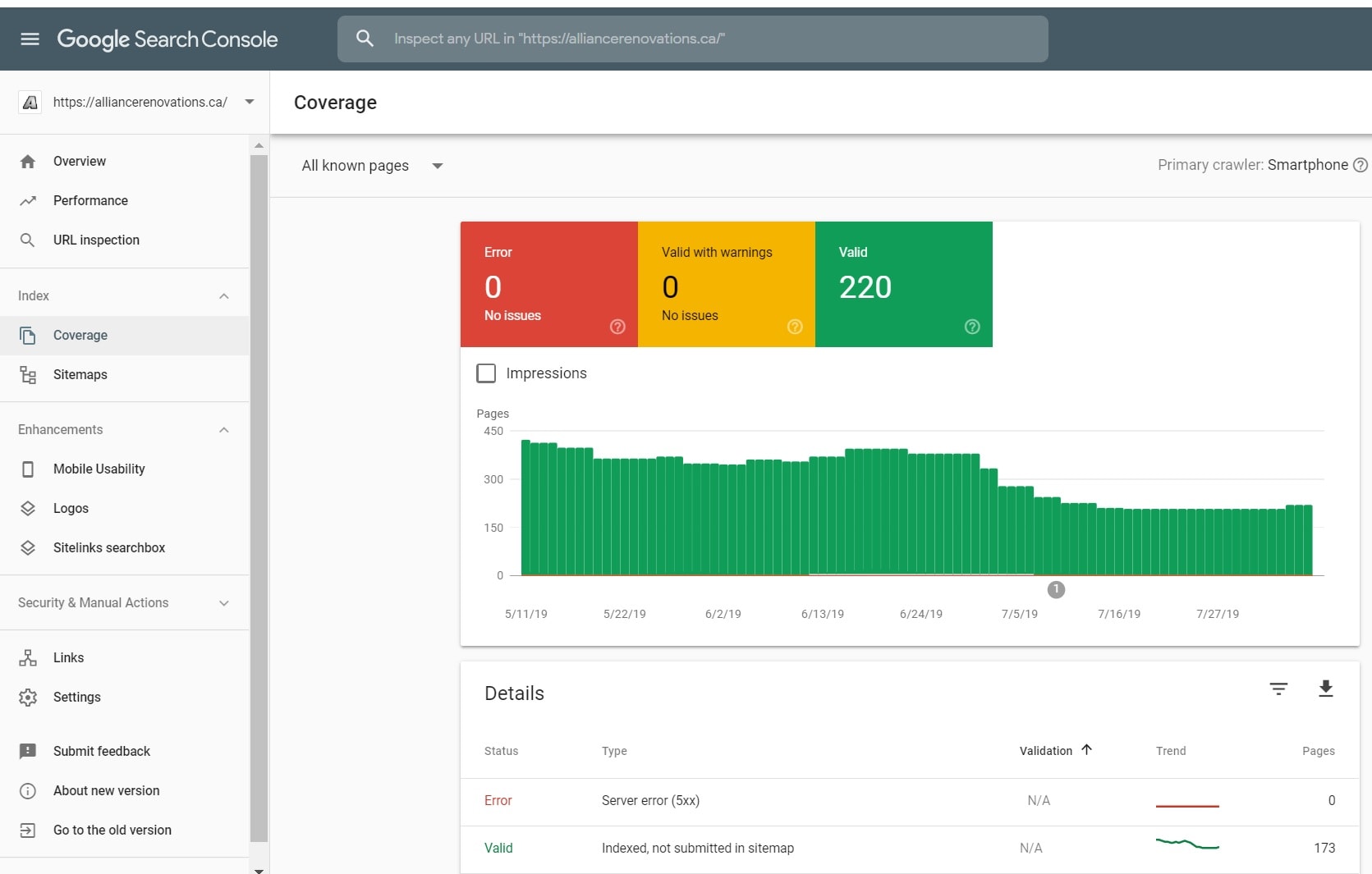

Once all the appropriate pages are crawlable, a technical SEO specialist will focus on optimizing the site’s crawl budget: the number of pages that Google and other search engines will crawl on the site within a given time period. Google Search Console (GSC) doesn’t report crawl budget as a single number, but its Crawl Stats report shows how many crawl requests Googlebot has made to the site each day.
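Server access logs offer another view of crawl activity. The Python sketch below counts, per day, the requests whose user agent mentions Googlebot. It assumes logs in the common Apache/Nginx “combined” format, and the sample lines are invented. User agents can be faked, so a real audit would also verify that the requests come from Google (for example, with a reverse DNS lookup on the IP address) before trusting the numbers.

```python
import re
from collections import Counter

# Captures the date part of the timestamp and the last quoted field on the line,
# which is the user agent in the "combined" log format.
LOG_LINE = re.compile(r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]*\].*"(?P<agent>[^"]*)"$')


def googlebot_hits_per_day(lines):
    """Count log lines per day whose user agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("day")] += 1
    return counts


# Invented sample lines (documentation IP addresses, hypothetical paths).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Mar/2024:06:25:01 -0700] "GET /services/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [10/Mar/2024:06:25:09 -0700] "GET /blog/ HTTP/1.1" 200 8304 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Mar/2024:07:12:44 -0700] "GET / HTTP/1.1" 200 4210 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.5 - - [11/Mar/2024:05:02:17 -0700] "GET / HTTP/1.1" 200 4210 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

print(googlebot_hits_per_day(SAMPLE_LOG))
```

Tracking these daily counts over time shows whether cleanup work is shifting Googlebot’s attention toward the pages that matter.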

Get Rid of Duplicate Pages

One easy way to optimize a site’s crawl budget is to remove any duplicate pages. Canonical tags help search engines decide which version of a page to index, but they don’t save crawl budget, because crawlers still have to fetch every duplicate URL to find the tag. Duplicates need to be removed completely for this technique to be effective.
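Exact duplicates can be found by fingerprinting page content. This Python sketch assumes each page’s HTML has already been crawled into a dictionary keyed by URL (the sample pages are hypothetical). It strips tags crudely and hashes the remaining text, so it only catches pages whose text is identical; near-duplicates need fuzzier comparison techniques.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(html):
    """Hash a page's text after crudely stripping tags and normalizing whitespace and case."""
    text = re.sub(r"<[^>]+>", " ", html)
    text = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose content fingerprints are identical."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[content_fingerprint(html)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl results: URL -> HTML.
SAMPLE_PAGES = {
    "https://example.com/shoes": "<h1>Shoes</h1><p>Red  shoes</p>",
    "https://example.com/shoes?sort=price": "<h1>Shoes</h1>\n<p>Red shoes</p>",
    "https://example.com/hats": "<h1>Hats</h1>",
}

print(find_duplicates(SAMPLE_PAGES))
# [['https://example.com/shoes', 'https://example.com/shoes?sort=price']]
```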

Prevent Indexing of Pages Without SEO Value

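Save the crawl budget for pages that are optimized for search. Pages such as privacy policies, terms and conditions, and expired promotions can be disallowed in robots.txt. URL parameters deserve attention too: the goal is to keep Google from crawling the same page separately under different parameters, leaving more of the crawl budget for relevant pages. Google Search Console used to offer a URL Parameters tool for this, but Google retired it in 2022, so today this is handled with canonical tags, consistent internal links, and robots.txt rules.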
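Parameter variants can be mapped back to a single URL by normalizing them. In this Python sketch, the parameters treated as irrelevant (`utm_*`, `sessionid`, `ref`, `sort`) are assumptions for a hypothetical site; whether a parameter really leaves the content unchanged has to be decided for each type of page. Grouping crawled URLs by their normalized form shows which ones are likely to be crawled as separate copies of the same page.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "ref", "sort"}
IGNORED_PREFIXES = ("utm_",)


def normalize_url(url):
    """Drop content-neutral parameters, sort the rest, lowercase the host, drop the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith(IGNORED_PREFIXES)
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


print(normalize_url("https://Example.com/shoes?utm_source=news&color=red&sessionid=42#top"))
# https://example.com/shoes?color=red
print(normalize_url("https://example.com/shoes?size=9&color=red&ref=home"))
# https://example.com/shoes?color=red&size=9
```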

Google Search Console Errors

In addition to crawling and robots.txt issues, several other errors reported in GSC can prevent pages from being indexed. These include 404 (not found) errors, server (5xx) errors, redirect errors, mobile usability errors, and new product issues. All of these problems will be addressed during the client’s crawl budget audit, including errors that appear automatically when a new site is launched or an existing one is updated.
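GSC reports these errors from Google’s side, but a site’s own crawl can catch many of them first. The Python sketch below classifies a URL by following its redirects. To keep it self-contained, a hypothetical `responses` dictionary of status codes and redirect targets stands in for real HTTP requests, and the labels it returns are invented for illustration rather than GSC’s wording.

```python
REDIRECT_CODES = {301, 302, 303, 307, 308}


def audit_url(url, responses, max_redirects=5):
    """Follow a URL's redirects and describe any problem found.

    `responses` maps each URL to (status_code, redirect_target_or_None). It is a
    stand-in for real HTTP requests; URLs missing from it are treated as 404s.
    """
    chain = [url]
    while True:
        status, target = responses.get(chain[-1], (404, None))
        if status in REDIRECT_CODES and target:
            if target in chain:
                return "redirect loop"
            if len(chain) > max_redirects:
                return "too many redirects"
            chain.append(target)
            continue
        hops = len(chain) - 1
        if status == 404:
            return "redirects to a 404" if hops else "not found (404)"
        if status >= 500:
            return "server error (%d)" % status
        return "ok after %d redirect(s)" % hops if hops else "ok"


# Hypothetical crawl results for a small site.
RESPONSES = {
    "/old-page": (301, "/new-page"),
    "/new-page": (200, None),
    "/a": (301, "/b"),
    "/b": (302, "/a"),
    "/contact": (500, None),
    "/old-promo": (301, "/expired-promo"),
}

for path in ["/new-page", "/old-page", "/a", "/contact", "/missing", "/old-promo"]:
    print(path, "->", audit_url(path, RESPONSES))
```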

Technical SEO is your website’s foundation

Optimizing Structure

Site structure is an important element of technical SEO. The site should have a shallow structure, which makes it easier to crawl, and plenty of internal links, which help pass ranking signals between pages and make it easier for users to get around.

The first step to improving site structure is to perform an internal link audit. This involves checking click depth to ensure that no essential page is more than three clicks away from the site’s home page, removing broken links, and reducing redirects.
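Click depth is the length of the shortest chain of internal links from the home page to a page, which is exactly what a breadth-first search computes. This Python sketch assumes a crawl has already produced a hypothetical map of each page to the pages it links to, and it uses the three-click threshold described above.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the home page; depth = fewest clicks needed to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
SITE = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/technical-seo/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/old-post/"],
}

depths = click_depths(SITE)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)  # ['/blog/old-post/']
```

Pages known from other sources, such as the sitemap, that never appear in the result have no internal link path from the home page at all, which is worth flagging in the same audit.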

Reviewing Sitemaps

Sitemaps are important both for users navigating the website and for search engines crawling it. In the context of SEO, XML sitemaps convey important information about the site’s structure and help search engines find and index new content.

It’s important to update XML sitemaps every time new content is added to the site and to keep them free of clutter. Make a point of removing 4XX pages, non-canonical pages, and noindexed pages from the sitemap. A sitemap full of such URLs can lead search engines to trust it less or ignore it altogether, so stay on top of sitemap updates.
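Generating the sitemap from crawl data makes this cleanup automatic. The Python sketch below uses the standard library’s `xml.etree.ElementTree` and keeps only pages that return HTTP 200, are their own canonical URL, and are not marked noindex. The field names on each page record (`url`, `status`, `canonical`, `noindex`, `lastmod`) are assumptions about how the crawl data is stored.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML listing only indexable, canonical pages that return HTTP 200."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["status"] != 200:              # drops 4XX/5XX pages and redirects
            continue
        if page["canonical"] != page["url"]:   # drops non-canonical duplicates
            continue
        if page["noindex"]:                    # drops pages that opt out of indexing
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page["url"]
        ET.SubElement(entry, "lastmod").text = page["lastmod"]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical crawl records.
CRAWLED = [
    {"url": "https://example.com/", "status": 200, "canonical": "https://example.com/",
     "noindex": False, "lastmod": "2024-03-01"},
    {"url": "https://example.com/old", "status": 404, "canonical": "https://example.com/old",
     "noindex": False, "lastmod": "2023-01-01"},
    {"url": "https://example.com/?ref=x", "status": 200, "canonical": "https://example.com/",
     "noindex": False, "lastmod": "2024-03-01"},
    {"url": "https://example.com/thanks", "status": 200, "canonical": "https://example.com/thanks",
     "noindex": True, "lastmod": "2024-03-01"},
]

print(build_sitemap(CRAWLED))
```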

Try to keep sitemaps short and focused. A single sitemap file can list at most 50,000 URLs and be no larger than 50 MB uncompressed; larger sites split their URLs across several sitemaps listed in a sitemap index file. That limit is an upper bound, not a target: leaner sitemaps that list only the pages that matter make it easier for search engines to find and prioritize the website’s most important content.
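The sitemap protocol allows at most 50,000 URLs per sitemap file, so a larger site splits its URL list into chunks and lists each chunk’s file in a sitemap index. In this Python sketch the product URLs and the `sitemap-N.xml` file names are hypothetical, and it does not check the 50 MB size limit, which can matter for sites with very long URLs.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # limit per sitemap file under the sitemap protocol


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a URL list into consecutive pieces of at most `size` URLs."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def sitemap_index(sitemap_locations):
    """Build a sitemap index that points to each individual sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locations:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return ET.tostring(index, encoding="unicode")


# Hypothetical site with 120,000 product URLs.
urls = ["https://example.com/product/%d" % n for n in range(120_000)]
parts = chunk(urls)
print([len(part) for part in parts])  # [50000, 50000, 20000]
index_xml = sitemap_index(
    "https://example.com/sitemap-%d.xml" % (i + 1) for i in range(len(parts))
)
print(index_xml)
```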

Site Architecture

Site architecture refers to how a website is organized, including which of its pages are crawled and which are disallowed. Optimizing a site’s architecture involves finding metadata opportunities, optimizing URL structure, improving the sitemap, and a variety of other complex tasks. It’s usually best to leave this heavily technical work to the pros.

Structured Data

The term structured data can refer to any organized data on a site. In the context of SEO, though, it refers specifically to markup added to web pages to describe their content. The point of structured data is to help search engines understand the content and to enhance how the website appears in search engine results pages (SERPs).

One great thing about structured data is that the major search engines support the same standardized vocabulary of types and properties, known as Schema.org. Not all digital marketers use Schema.org markup, but those who don’t are missing out on a great opportunity.

Some advantages of using Schema.org markup include eligibility for rich results, Knowledge Graph information, rich cards for mobile users, and rich results for Accelerated Mobile Pages (AMP). These enhanced search results can also bring higher click-through rates (CTR) and additional traffic. After all, the same features that make a listing stand out in search also improve the user experience.
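Google recommends adding structured data in JSON-LD format. As an illustration, this Python sketch builds Schema.org `LocalBusiness` markup; the business name, URL, and phone number are placeholders, the helper name is hypothetical, and a real page would usually include more properties. The resulting tag goes in the page’s HTML, and Google’s Rich Results Test can confirm whether the markup is eligible for rich results.

```python
import json


def local_business_jsonld(name, url, telephone, city, region, country):
    """Return a <script> tag containing Schema.org LocalBusiness markup as JSON-LD.

    Note: json.dumps does not escape "</", so values must not contain "</script>".
    """
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": city,
            "addressRegion": region,
            "addressCountry": country,
        },
    }
    return '<script type="application/ld+json">\n%s\n</script>' % json.dumps(data, indent=2)


# Placeholder business details.
print(local_business_jsonld("Example SEO Co.", "https://example.com/", "+1-555-0100",
                            "Calgary", "AB", "CA"))
```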

Mobile Responsiveness

It’s no longer breaking news that Google switched to mobile-first indexing, but not all business owners know exactly what that means. It means Google primarily uses the mobile version of a site’s content for indexing and ranking, with the implication that sites that work well on mobile devices are better positioned in search results.

SEO experts make a point of testing all of their clients’ pages for mobile-friendliness and tracking mobile rankings. They’ll also run a separate, comprehensive audit of the mobile site alongside the desktop version, which requires configuring the crawler to use a mobile user agent and making sure robots.txt doesn’t block it.
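Mobile-friendliness depends on many things, but one basic signal is easy to check automatically: whether the page declares a responsive viewport, as in `<meta name="viewport" content="width=device-width, initial-scale=1">`. This Python sketch checks only that one tag in HTML that has already been downloaded (ideally fetched with a mobile user agent); the names are hypothetical, and it is no substitute for testing pages on real devices.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page sets its viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
print(has_responsive_viewport('<meta name="viewport" content="width=1024">'))  # False
```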

Given that most internet users now browse on their phones, there’s no excuse for failing to optimize a site for mobile use. It’s arguably one of the most important elements of technical SEO, and it’s also good practice for attracting new customers and clients.

If visitors can’t access certain pages or find them difficult to view on their phones, they won’t wait until they get home to check the site on a computer. They’ll leave and look for products or services elsewhere.

Page Speeds

Page speed should be a top priority for every site owner. Slow loading times can seriously hurt both user experience and search engine rankings. Every element on the page needs to be optimized for speed, including images, videos, and scripts. Google’s PageSpeed Insights tool and GTmetrix can both help identify page speed issues.
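PageSpeed Insights also has an API, which is handy for checking many pages at once. This Python sketch assumes the v5 `runPagespeed` endpoint and reads the Lighthouse performance score, which the response reports between 0 and 1. The helper names are hypothetical, only the parsing helpers run without network access, and Google may require an API key (the `key` parameter) for regular automated use.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights v5 request URL for one page ("mobile" or "desktop")."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(response):
    """Convert the 0-1 Lighthouse performance score in a PSI response to 0-100."""
    score = response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_score(page_url, strategy="mobile"):
    """Call the live API (network access required) and return the performance score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as resp:
        return performance_score(json.load(resp))


print(psi_request_url("https://example.com/"))
```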

Calgary Search Engine Optimization

Get Help Now

All the information above can feel a little overwhelming to business owners who aren’t particularly tech-savvy, and it only scratches the surface of technical SEO. That’s why it’s so important to work with a specialist who knows the ins and outs of this field.

Business owners need a company they can trust to optimize every aspect of their sites, including those listed above. Each backend element must be properly optimized, and data must be gathered to confirm that all the tricks of the trade are paying off. With a bit of professional help, business owners can expect not only higher rankings in Google and other search engines, but also improvements in their CTRs, site traffic, and customer conversion rates.

Don’t be put off by all the internet jargon and the complex technical procedures. Call (403) 383-0153 to speak with a dedicated technical SEO specialist who can help today.